- Sponsored

AI bridges data-sharing gaps for faster disaster response

When torrential rains struck central Texas last July, flash floods overwhelmed rural communities within hours, and responders lacked the complete view they needed to act quickly. Local sheriffs had one set of reports, state emergency managers had another, and federal weather and relief agencies had yet another. By the time situational updates reached incident commanders, much of the information was too stale to act on.

In disaster response, the lag between when critical data is collected and when it can be acted on can cost lives and delay urgent relief. A new generation of technology, such as AI-enabled data fabrics, is helping close that gap by unifying disparate data streams and moving decision-making closer to the point of need.

Tackling interoperability

Despite improvements in forecasting, predicting the local impact of disasters and coordinating the response remain difficult. One long-standing reason is that jurisdictions rely on different radios, databases, and reporting formats.

In Texas, after-action reports from both floods and hurricanes highlighted how responders often couldn’t even talk to each other over radio networks. The same fragmentation affects data and applications: state police, public works, and local sheriffs often maintain separate systems that do not connect.

Compounding this is the way information has traditionally flowed: 911 calls, sensor readings, social media posts, and field reports move up to a command hub before filtering back down to teams. That model creates bottlenecks and slows decision-making.

“A gigantic complaint in the recent flooding was, ‘We’re not hearing from the people we need to hear from the way we need to hear from them.’ And it’s all because they [federal, state and local authorities] have different technologies,” said Joshua Hoeft, director of state and local government initiatives at General Dynamics Information Technology (GDIT).

This is where the concept of a data fabric becomes crucial. A data fabric connects information from different sources, making it easier to ingest data in diverse formats and feed it into a common operating model, explained Steven Switzer, director of AI for Federal Civilian at GDIT. “Instituting a data fabric helps all that data flow freely,” he said.

AI-enabled decision-making

What’s changing is the ability to use purpose-built AI models to access and synthesize information from spreadsheets, databases, documents, and real-time sensor feeds. These models help give every stakeholder a consistent, common picture of the situation.

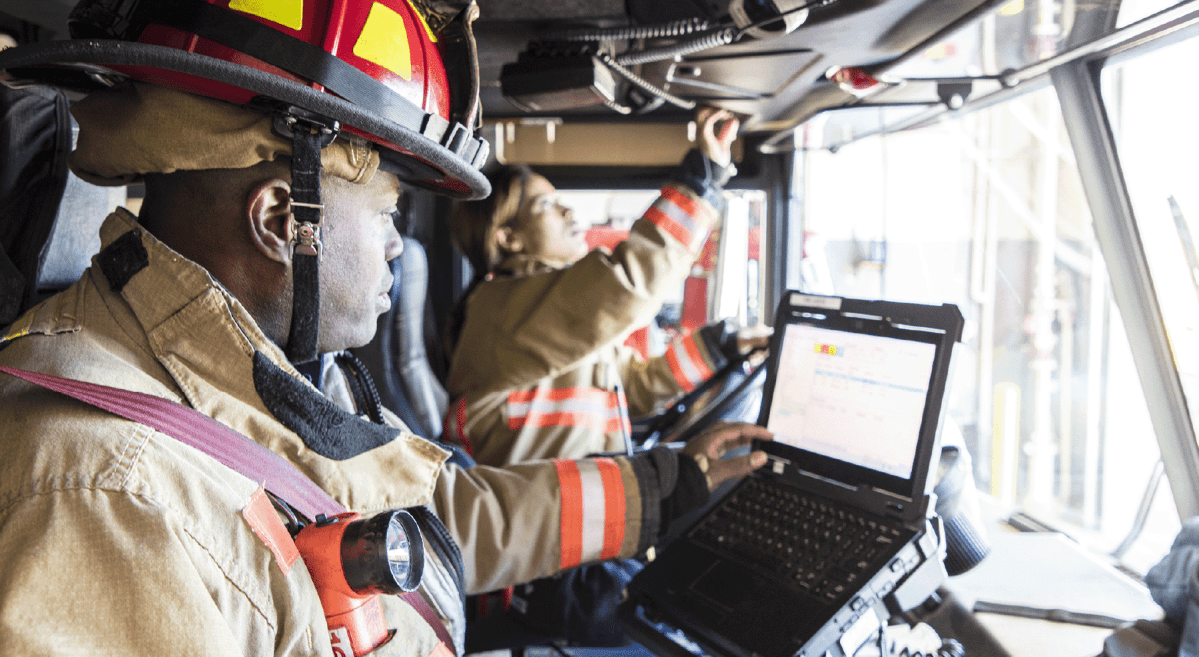

By decentralizing the flow of information, AI-enabled data fabrics also put immediate analysis into the hands of the people carrying out the mission, whether they are identifying affected areas, locating survivors, or staging resources.

“Instead of sending everything up to a centralized command center, the goal now is to bring decision-making as close to the point of mission as possible,” said Switzer. “That means putting intelligence directly into the hands of first responders, where reaction time is critical.”

Beyond speeding up reactions, AI is also being applied to predict how wildfires or floods might unfold. Models can scan satellite imagery for heat signatures that suggest new wildfire ignitions, or forecast likely flood zones from rainfall and terrain data. That lets agencies pre-stage supplies, reinforce infrastructure, or even head off disasters before they escalate.

Switzer cited a pilot in California, where infrared imaging and AI detection identified wildfire hotspots before they spread into populated areas. “It’s about getting ‘left’ of the event,” he said. “With the correct predictive models, you can plan for disasters instead of being surprised by them.”

Lessons from the defense sector

The groundwork for linking diverse communication and data systems with AI was laid nearly ten years ago in the defense sector. Recognizing that soldiers must be able to act locally in disconnected, degraded, intermittent, and limited (DDIL) environments, the Department of War invested heavily in secure, interoperable systems.

Systems integrators play a critical role. Rather than asking agencies to rip and replace their existing IT, integrators can build data fabrics that translate disparate formats and connect them in a common operating framework.

“You don’t want agencies spending all their time wrangling data,” Switzer explained. “AI can summarize, contextualize, and give them what they need so they can focus on the mission — saving lives. Our role as a system integrator is important because we see the full picture — the full enterprise and all those disparate systems. Our goal and our job is to make sure that those work together.”

The takeaway for civilian agencies is that AI isn’t a stand-alone tool but part of an integrated process combining software, security, infrastructure, and training. “The sooner states integrate AI into their existing IT and operations practices, the more successful they’ll be,” Switzer said.

Hardware platforms are also evolving to support this shift. GDIT’s Raven mobile command vehicle, for example, brings satellite-connected AI and communications interoperability directly into disaster zones.

“If a flood knocks out power and internet across a region, you can drive Raven in and reestablish critical operations,” Hoeft said. From drone management to secure data collection, such mobile edge hubs enable responders to operate independently of traditional infrastructure.

Staying flexible

As with any IT initiative, successful implementation depends on a long-term vision, even amid unpredictable events. Sustaining AI systems requires regular updates, governance agreements, and shared funding. Predictive models in particular must be retrained regularly as conditions change.

Cost-sharing is another factor. State, local, and federal agencies must collectively invest in interoperable platforms rather than each experimenting in isolation. That requires champions at both executive and mission levels. “If it’s only driven from the top, you risk building tech without a mission,” Switzer said. “If it’s only bottom-up, you risk roadblocks and limited reach. You need both.”

The urgency has only grown. As disasters intensify, delays in coordination can multiply costs. At the same time, advances in AI accessibility have made it feasible to embed intelligence across many missions, not just IT.

“This is what’s different today than even a year ago,” Switzer said. “AI isn’t just in the hands of technical organizations anymore. It’s moving into non-technical missions, where it can directly augment decision-making.”
