San Jose, Calif., publishes generative AI guidelines

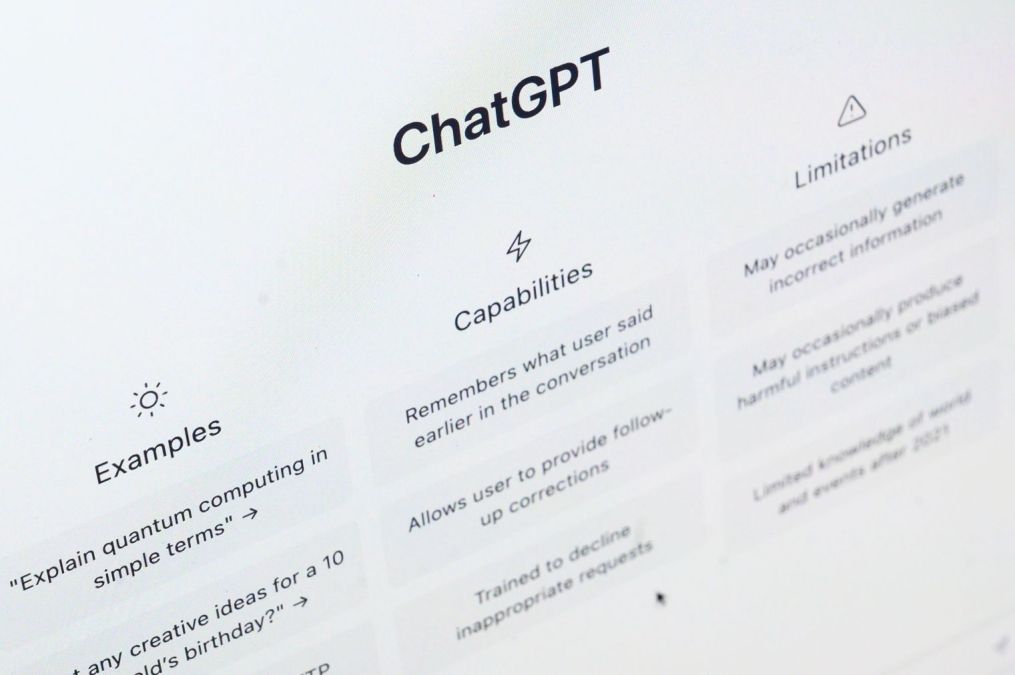

San Jose, California, last week published new guidelines that describe how city employees are permitted to use generative AI tools like ChatGPT in their daily work.

The 23-page document includes a set of guiding principles — such as privacy-first, accurate and equitable — along with processes and instructions for city employees, a model for understanding the risks associated with using generative AI tools and a primer on issues surrounding the technology, such as copyright considerations. San Jose’s new governance document follows similar efforts in Boston and Seattle to shore up governance of a technology that’s been quickly adopted inside government offices.

Albert Gehami, San Jose’s city privacy officer, told StateScoop the guidelines are part of the city’s broader effort to ensure that potentially sensitive data is handled carefully.

“The goal is just making sure that the data we collect is to support our residents, that we look back 20 years from now at the technology and the systems we put in place and think, ‘Yeah, that was a good idea,’” Gehami said. “This involves mitigating bias, making sure the systems we’re putting in are effective, the data’s only being shared with the people we intended to and most importantly we are communicating with our residents, which we have found to be one of the most important parts throughout the last couple years.”

San Jose’s document includes five key guidelines, including an admonition that information entered into tools like ChatGPT can be viewed by outside parties. It also advises staff to “review, revise and fact check via multiple sources” any output the tool produces, and to “cite and record” all generative AI use, which includes filling out a special form for city records. Lastly, staff are directed to create dedicated accounts for city use and to check the guidelines for changes each quarter.

Gehami said the new guidelines were created with input from the city’s digital privacy advisory taskforce, which includes researchers from groups like the American Civil Liberties Union, professors from universities such as Stanford and Cornell, and officials from other local governments, such as Los Angeles County. Publishing the guidelines, he said, is the first step in getting the public involved as the city continues to refine its governance.

After ChatGPT launched last year, state and local officials across the U.S. began discussing how to encourage the responsible use of generative AI in government. Executive appointees and lawmakers alike are pushing for stronger governance of a technology that, by many reports, government staff began using almost immediately.

Gehami said that in many cities, he has seen privacy chiefs being required to make AI a central part of their work.

“The privacy function has been almost ‘voluntold’ in many cases to help advise on the AI growth in a lot of cities,” he said. “I’ve been seeing this in conversations in major cities across the West Coast, just because in the privacy space we’re already thinking very heavily about how the data’s being used, processed and what the product of it is from a lot of different perspectives, so it was sort of a natural evolution.”

In San Jose’s case, Gehami said generative AI doesn’t fundamentally change how the city functions, but that it’s an important technology for him to keep tabs on.

“The city is run by people and it will continue to be run by people, but this provides an additional tool in the same way the computer or the internet provided a tool that staff and folks can use to provide better services, faster services than we would have without the tools,” he said.