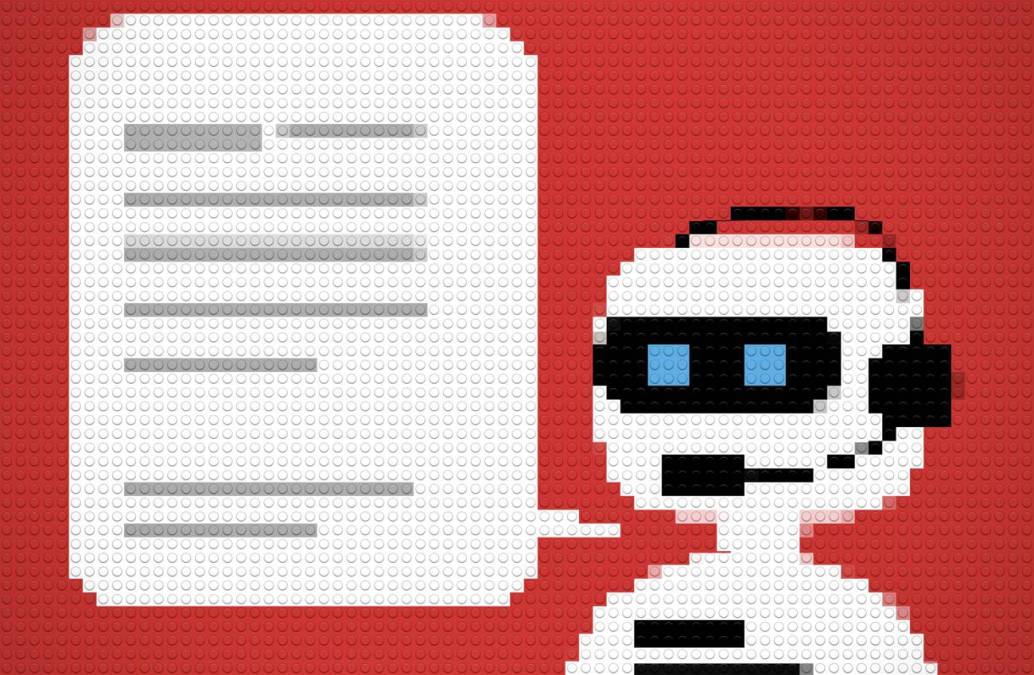

California passes bill regulating companion chatbots

The California legislature approved a bill Tuesday that would place new safeguards on artificial intelligence-powered chatbots to better protect children and other vulnerable users. The bill now heads to Gov. Gavin Newsom’s desk.

Senate Bill 243 was introduced in July by state Sen. Steve Padilla. It would require companies that operate chatbots marketed as “companions” to avoid exposing minors to sexual content, regularly remind users that they are speaking to an AI and not a person, and disclose that chatbots may not be appropriate for minors.

“As we strive for innovation, we cannot forget our responsibility to protect the most vulnerable among us,” Padilla said in a statement. “Safety must be at the heart of all developments around this rapidly changing technology. Big Tech has proven time and again, they cannot be trusted to police themselves.”

The push for regulation comes as tragic instances of minors harmed by chatbot interactions have made national headlines. Last year, Adam Raine, a teenager in California, died by suicide after allegedly being encouraged by OpenAI’s chatbot, ChatGPT. In Florida, 14-year-old Sewell Setzer formed an emotional relationship with a chatbot on the platform Character.ai before taking his own life.

A March study by the MIT Media Lab examining the relationship between AI chatbots and loneliness found that higher daily usage correlated with increased loneliness, dependence and “problematic” use, a term the researchers used to characterize addiction to chatbots. The study revealed that companion chatbots can be more addictive than social media because of their ability to figure out what users want to hear and provide that feedback.

Setzer’s mother, Megan Garcia, and Raine’s parents have filed separate lawsuits against Character.ai and OpenAI, respectively, alleging that the chatbots were built with addictive, reward-based features and did nothing to intervene when the teens expressed thoughts of self-harm.

The California legislation would also require companies to program AI chatbots to respond to signs of suicidal thoughts or self-harm, including by directing users to crisis hotlines, and would require annual reporting on how the bots affect users’ mental health. The bill would allow families to pursue legal action against companies that fail to comply.